Below is an alternative to getting Google search results with Scrapy. Because installing Scrapy and its dependencies on Windows may pose an issue, this alternative makes use of the more lightweight Pattern module. Unlike the Scrapy version, it requires only Pattern as a dependency. The script can be found on GitHub.

Similar to the previous Scrapy post, it focuses on scraping the links from the Google results page for the input search keyword. This script also retrieves the basic description that Google generates for each result. Its advantage is that it can search multiple keywords in a single run and return a dict with each search keyword as a key and the corresponding result links and descriptions as the value. This allows more flexibility in handling the data.
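To illustrate the flexibility of the dict output, here is a minimal sketch that flattens it into rows and CSV text. It assumes the dict maps each keyword to a list of `[link, desc]` pairs, the same shape as `result_link_desc_pair_list_per_keyword` in the parsing code; the attribute that holds the dict in the original class is not shown here, and `flatten_results` and `results_to_csv` are hypothetical helpers, not part of the script.

```python
import csv
import io


def flatten_results(results):
    """Turn {keyword: [[link, desc], ...]} into (keyword, rank, link, desc) rows.

    Hypothetical helper: assumes the dict shape described above.
    """
    rows = []
    for keyword, pairs in results.items():
        # enumerate from 1 so the rank matches the result's position on the page
        for rank, (link, desc) in enumerate(pairs, start=1):
            rows.append((keyword, rank, link, desc))
    return rows


def results_to_csv(results):
    """Serialize the flattened rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(['keyword', 'rank', 'link', 'desc'])
    writer.writerows(flatten_results(results))
    return buffer.getvalue()
```

Because every row carries its keyword, results for several search terms can be written to one file, sorted, or filtered without further bookkeeping.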

It works in a similar fashion to the Scrapy version: it first forms the search URL for each keyword, then uses Pattern's URL and DOM objects to download the page and parse out the links and descriptions. The parsing is based on the CSS selectors supported by Pattern's DOM.
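The URL-forming step can be sketched with the standard library alone. This is a minimal Python 3 sketch, assuming the `https://www.google.com/search` endpoint with the `q` (query) and `num` (number of results) parameters; the original class's `formed_search_url()` may build its URL differently, and `form_search_url` and `form_search_urls` are hypothetical names.

```python
from urllib.parse import urlencode

# Assumed endpoint; the original script's base URL is not shown in this post
GOOGLE_SEARCH_BASE = 'https://www.google.com/search'


def form_search_url(keyword, num_results):
    """Build a Google search URL for one keyword (hypothetical helper)."""
    # urlencode escapes spaces as '+' and percent-encodes special characters
    query = urlencode({'q': keyword, 'num': num_results})
    return GOOGLE_SEARCH_BASE + '?' + query


def form_search_urls(keywords, num_results):
    """Map each keyword to its search URL, one URL per keyword."""
    return {keyword: form_search_url(keyword, num_results) for keyword in keywords}
```

For example, `form_search_url('tokyo go', 5)` returns `'https://www.google.com/search?q=tokyo+go&num=5'`. Keeping the keyword as the dict key makes it easy to attach each page's parsed results back to the keyword that produced them.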

```python
from __future__ import print_function  # lets the print() calls work on Python 2 and 3

import re

from pattern.web import URL, DOM

# Google wraps each result link as "/url?q=<target>&sa=..." ("&amp;sa=" in raw HTML);
# the non-greedy group stops at the first "&sa"
RESULT_LINK_RE = re.compile(r'q=(.*?)&(amp;)?sa')


def create_dom_object(self):
    """ Create the DOM object of the target url for scraping.

    Takes into consideration that there might be a query problem.
    """
    self.url_query_timeout = 0  # reset so an earlier failure does not block this url
    try:
        url = URL(self.target_url_str)
        self.dom_object = DOM(url.download(cached=True))
    except Exception:
        print('Problem retrieving data for this url:', self.target_url_str)
        self.url_query_timeout = 1


def parse_google_results_per_url(self):
    """ Parse the Google results of one search url.

    Collects both the link and the desc results.
    """
    self.create_dom_object()
    if self.url_query_timeout:
        return

    ## process the link and temp desc together
    dom_object = self.tag_element_results(self.dom_object, 'h3[class="r"]')

    for n in dom_object:
        ## Get the result link; skip the description if the link cannot be found
        match = RESULT_LINK_RE.search(n.content)
        if not match:
            continue
        temp_link_data = match.group(1)
        print(temp_link_data)
        self.result_links_list_per_keyword.append(temp_link_data)

        ## get the desc that comes with the results
        temp_desc = n('a')[0].content
        temp_desc = self.strip_html_tag_off_desc(temp_desc)
        print(temp_desc)
        self.result_desc_list_per_keyword.append(temp_desc)

        self.result_link_desc_pair_list_per_keyword.append([temp_link_data, temp_desc])
        print()
```
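The regex above also captures Google-internal links such as `/search?q=jogging&...` (visible in the jogging output), because they contain `q=` as well. A minimal alternative sketch, assuming you have the anchor's `href` value rather than the whole `h3` content, parses the href with Python 3's `urllib.parse` and keeps only `/url` redirects. `extract_target_url` is a hypothetical helper, and unlike the regex it percent-decodes the target (`watch%3Fv%3D...` becomes `watch?v=...`).

```python
from html import unescape
from urllib.parse import urlparse, parse_qs


def extract_target_url(href):
    """Return the destination of a Google '/url?q=...' redirect href, or None.

    Hypothetical helper; assumes Google's '/url?q=<target>&sa=...' link format.
    """
    # hrefs copied from raw HTML may still contain '&amp;' entities
    parsed = urlparse(unescape(href))
    # only '/url' redirects point at external results; '/search' links are internal
    if parsed.path != '/url':
        return None
    # parse_qs splits on '&' and percent-decodes each value
    targets = parse_qs(parsed.query).get('q')
    return targets[0] if targets else None
```

Returning `None` for non-redirect links lets the caller skip them the same way the original loop skips entries with no regex match.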

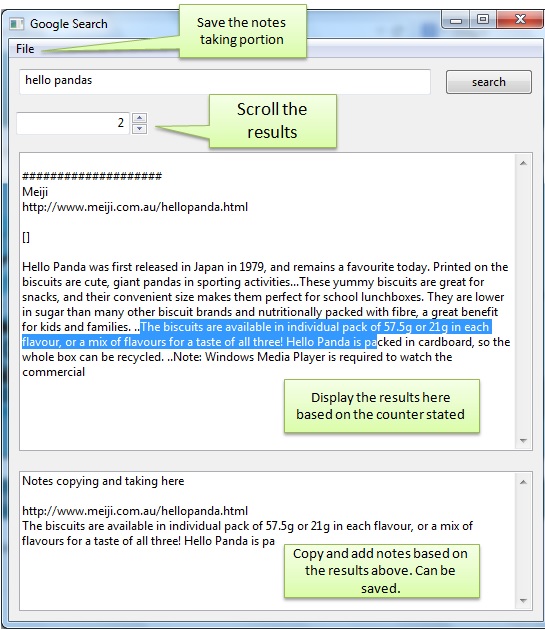

A sample run of the script is shown below:

```python
## User options
NUM_SEARCH_RESULTS = 5  # number of search results returned
search_words = ['tokyo go', 'jogging']  # set the keyword setting

## Create the google search class
hh = gsearch_url_form_class(search_words)

## Set the number of results
hh.set_num_of_search_results(NUM_SEARCH_RESULTS)

## Generate the url list based on the search items
url_list = hh.formed_search_url()

## Parse the google page based on the url
hh.parse_all_search_url()

print('End Search')
```

The output is as follows:

```
================
Results for key: tokyo go
================
http://www.youtube.com/watch%3Fv%3DwLgSbo0YsN8
Tokyo Go | A Mickey Mouse Cartoon | Disney Shows – YouTube

http://www.gotokyo.org/en/
Home / Official Tokyo Travel Guide GO TOKYO

http://disney.wikia.com/wiki/Tokyo_Go
Tokyo Go – DisneyWiki

http://video.disney.com/watch/disneychannel-tokyo-go-4e09ee61b04d034bc7bcceeb
Tokyo Go | Mickey Mouse and Friends | Disney Video

http://www.imdb.com/title/tt2992228/
"Mickey Mouse" Tokyo Go (TV Episode 2013) – IMDb

================
Results for key: jogging
================
http://en.wikipedia.org/wiki/Jogging
Jogging – Wikipedia, the free encyclopedia

jogging&num=100&client=firefox-a&rls=org.mozilla:en-US:official&channel=fflb&ie=UTF-8&oe=UTF-8&prmd=ivns&source=univ&tbm=nws&tbo=u
News for jogging

jogging&oe=utf-8&client=firefox-a&num=100&rls=org.mozilla:en-US:official&channel=fflb&gfe_rd=cr&hl=en
Images for jogging

http://www.wikihow.com/Start-Jogging
How to Start Jogging: 7 Steps (with Pictures) – wikiHow

http://www.medicinenet.com/running/article.htm
Running: Learn the Facts and Risks of Jogging as Exercise
```