Python Pattern is a good alternative to NLTK: it is lightweight, yet it offers extensive natural language processing features. It can also act as a web crawler and retrieve information from Twitter, Facebook, etc. The project's website summarizes its full functionality as follows:

“Pattern is a web mining module for the Python programming language.

It has tools for data mining (Google, Twitter and Wikipedia API, a web crawler, a HTML DOM parser), natural language processing (part-of-speech taggers, n-gram search, sentiment analysis, WordNet), machine learning (vector space model, clustering, SVM), network analysis and <canvas> visualization.”

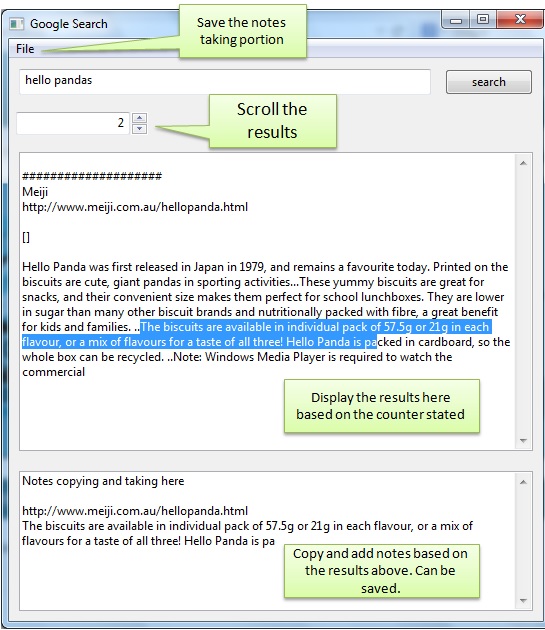

The Python script below illustrates some of Pattern's functionality. I intend to reuse some of these functions in the Google search module I developed previously.

The script crawls a given web page, extracts its plain text and removes short sentences (e.g., links). It then finds the top x most frequent words on the page and, finally, searches the text for all phrases that contain those high-frequency words.
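To make the middle two steps concrete without installing Pattern, here is a minimal, dependency-free sketch of the short-sentence filter and the word-frequency ranking. It swaps in a naive regex sentence splitter and `collections.Counter` for Pattern's `tokenize()` and `Document.keywords()`, and uses a tiny hand-picked stop-word list; the helper names (`split_sentences`, `keep_long_sentences`, `top_words`) and the sample page text are hypothetical. Pattern ranks keywords with its own weighting, so its results will not match these plain counts exactly.

```python
import re
from collections import Counter

# Hypothetical stop-word list; Pattern ships its own, larger list.
STOPWORDS = {"a", "an", "and", "from", "in", "is", "most", "of", "the", "to"}


def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def keep_long_sentences(text, min_words=6):
    """Drop short fragments (menu items, link labels) and re-join with spaces."""
    kept = [s for s in split_sentences(text)
            if len(re.findall(r"[A-Za-z0-9']+", s)) >= min_words]
    return " ".join(kept)


def top_words(text, top_count, min_len=3):
    """Rank lower-cased alphabetic words by raw count, skipping stop words
    and words shorter than min_len characters."""
    words = (w.lower() for w in re.findall(r"[A-Za-z]+", text))
    counts = Counter(w for w in words if len(w) >= min_len and w not in STOPWORDS)
    return [w for w, _ in counts.most_common(top_count)]


page = ("Home. Contact us. A turbine extracts energy from a moving fluid. "
        "Steam turbines and gas turbines drive most generators today. "
        "An impulse turbine uses the momentum of a fluid jet.")
text = keep_long_sentences(page)
print(text)                # the three full sentences; "Home." and "Contact us." are gone
print(top_words(text, 3))  # ['turbine', 'fluid', 'turbines']
```

As in the script, 'turbine' and 'turbines' are still counted as two different words.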

The script still requires a number of improvements. For example, the keywords ‘turbine’ and ‘turbines’ are the same word and should be counted as one.
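One way to merge such variants is to normalize each word before counting. The sketch below uses a naive, hypothetical `fold_plural()` rule set (strip a trailing `-s`, map `-ies` to `-y`) purely as an illustration; it is not a real stemmer and will mishandle irregular words. In practice a Porter stemmer, or Pattern's own `pattern.en.singularize()`, would likely be a better fit.

```python
from collections import Counter


def fold_plural(word):
    """Naive plural folding (hypothetical helper, not a real stemmer)."""
    if len(word) > 4 and word.endswith("ies"):
        return word[:-3] + "y"           # energies -> energy
    if len(word) > 3 and word.endswith("s") and not word.endswith(("ss", "us", "is")):
        return word[:-1]                 # turbines -> turbine
    return word                          # gas, class, turbine stay unchanged


def merged_counts(words):
    """Count words after lower-casing and folding plurals to singular."""
    return Counter(fold_plural(w.lower()) for w in words)


counts = merged_counts(["turbine", "turbines", "Turbines", "fluid", "gas", "energies"])
print(counts.most_common(2))  # [('turbine', 3), ('fluid', 1)]
```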

<pre>
from pattern.en import parsetree, tokenize
from pattern.search import search
from pattern.vector import Document
from pattern.web import URL, plaintext

def get_plain_text_fr_website(web_address):
    """Scrape the plain text from a web page.

    Args:
        web_address (str): web page URL (http/https).

    Returns:
        (str): plain text of the page.
    """
    html = URL(web_address).download()  # raw HTML of the page
    return convert_html_to_plaintext(html)

def convert_html_to_plaintext(html):
    """Convert an HTML string to plain text.

    Args:
        html (str): HTML source.

    Returns:
        (str): plain text.

    TODO: include more parameters.
    """
    return plaintext(html)

def retain_text_with_min_sentences_len(raw_text, len_limit=6):
    """Return the text, keeping only sentences with at least len_limit words.

    Args:
        raw_text (str): input text (one or more paragraphs).
        len_limit (int): minimum number of words per sentence.

    Returns:
        (str): text made up of the retained sentences.
    """
    sentence_list = get_sentences_with_min_words(
        split_text_to_list_of_sentences(raw_text), len_limit)
    # Join with a space: joining with '' glues the end of one sentence to
    # the start of the next (e.g. "turbines .Reaction").
    return ' '.join(sentence_list)

def split_text_to_list_of_sentences(raw_text):
    """Split raw text into a list of sentences.

    Args:
        raw_text (str): input text (one or more paragraphs).

    Returns:
        (list): list of sentence strings.
    """
    return tokenize(raw_text)

def get_sentences_with_min_words(sentences_list, len_limit):
    """Return the sentences that contain at least len_limit words.

    Args:
        sentences_list (list): list of sentence strings.
        len_limit (int): minimum number of words.

    Returns:
        (list): sentences with at least len_limit words.
    """
    return [s for s in sentences_list if word_cnt_in_sent(s) >= len_limit]

def word_cnt_in_sent(sentence):
    """Return an approximate number of words in a sentence.

    Splits on spaces and counts the alphanumeric tokens, plus one.

    Args:
        sentence (str): a sentence, e.g. one returned by tokenize().

    Returns:
        (int): approximate number of words in the sentence.
    """
    return len([w for w in sentence.split(' ') if w.isalnum()]) + 1

def retrieve_string(match_grp):
    """Retrieve the matched string from a pattern.search.Match object.

    Args:
        match_grp (pattern.search.Match): a search match.

    Returns:
        (str): the words that matched, joined into one string.

    Note:
        Does not support selecting a specific group; always uses group 0.
    """
    return match_grp.group(0).string

def get_top_freq_words_in_text(txt_string, top_count,
                               filter_method=lambda w: w.lstrip("'").isalnum(),
                               exclude_len=0):
    """Get the most frequent words in a text.

    Args:
        txt_string (str): input text.
        top_count (int): number of top words to return.

    Kwargs:
        filter_method (callable): predicate that decides which words are kept,
            e.g. to ignore special characters (and, in some cases, numbers).
            Defaults to a lambda that keeps only alphanumeric words.
        exclude_len (int): drop keywords of exclude_len characters or fewer.
            The default 0 keeps every word.

    Returns:
        (list): list of top words.
    """
    docu = Document(txt_string, threshold=1, filter=filter_method)
    # keywords() returns (weight, word) tuples. Short words are dropped after
    # ranking, so fewer than top_count words may be returned.
    freq_keyword_tuples = docu.keywords(top=top_count)
    return [word for _, word in freq_keyword_tuples if len(word) > exclude_len]

def get_phrases_contain_keyword(text_parsetree, keyword, print_output=False, phrases_num_limit=5):
    """Return phrases in the parsed text that contain the keyword.

    The keyword is treated as a noun (NN|NNP|NNS), and the phrases returned
    are noun phrases, i.e. NP chunks.

    Args:
        text_parsetree (pattern.text.tree.Text): parse tree of the original text.
        keyword (str): one word, or alternatives separated by |, e.g. "cat|dog".

    Kwargs:
        print_output (bool): if True, print each match.
        phrases_num_limit (int): maximum number of phrases to return; 0 returns all.

    Returns:
        (list): the phrases found, with duplicates removed.

    TODO:
        Provide a limit per keyword.
    """
    # Match the keyword together with the surrounding adjective/noun tags
    # (JJ, NN, NNP, NNS) so that whole noun phrases are returned.
    target_search_str = 'JJ|NN|NNP|NNS?+ ' + keyword + ' NN|NNP|NNS?+'
    # Alternative pattern, only if the keyword is a top-frequency word:
    # 'JJ?+ NN NN|NNP|NNS+'
    target_search = search(target_search_str, text_parsetree)

    target_word_list = []
    for match in target_search:
        phrase = retrieve_string(match)
        if print_output:
            print(phrase)
        target_word_list.append(phrase)

    target_word_list_rm_duplicates = rm_duplicate_keywords(target_word_list)
    if phrases_num_limit > 0:
        return target_word_list_rm_duplicates[:phrases_num_limit]
    return target_word_list_rm_duplicates

def rm_duplicate_keywords(target_wordlist):
    """Remove duplicates from a list of keywords.

    Args:
        target_wordlist (list): list of keyword strings.

    Returns:
        (list): keywords with duplicates removed, in first-seen order.
    """
    # dict.fromkeys keeps first-seen order (Python 3.7+), unlike set(),
    # so the phrases_num_limit cut-off is deterministic.
    return list(dict.fromkeys(target_wordlist))

if __name__ == '__main__':
    # Example page to extract.
    web_address = 'http://en.wikipedia.org/wiki/Turbine'

    # Extract the plain text.
    webtext = get_plain_text_fr_website(web_address)

    # Remove very short sentences (such as side-bar menu items).
    modified_text = retain_text_with_min_sentences_len(webtext)

    # Summarize the main points of the page.
    # Step 1: get the top-frequency words, here 4.
    # exclude_len=2 drops words of 2 characters or fewer.
    list_of_top_freq_words = get_top_freq_words_in_text(
        modified_text, 4, lambda w: w.lstrip("'").isalpha(), exclude_len=2)
    print(list_of_top_freq_words)
    # >> ['turbine', 'turbines', 'fluid', 'impulse']

    # Step 2: parse the whole document for analysis.
    # The pattern.en parser groups words that belong together into chunks.
    # For example, "the black cat" is one chunk, tagged NP (i.e., a noun phrase).
    t = parsetree(modified_text, lemmata=True)

    # Step 3: find phrases that contain each top-frequency word.
    for n in list_of_top_freq_words:
        print('keywords:', n)
        print(get_phrases_contain_keyword(t, n))
        print('*' * 8)

    # Sample output (recorded with Python 2, hence the u'' prefixes; the live
    # page changes over time, so results will vary):
    # >> keywords: turbine
    # >> [u'the Francis Turbine', u'the marine turbine', u'most turbines', u'impulse turbines .Reaction turbines', u'turbine']
    # >> ********
    # >> keywords: turbines
    # >> [u'de Laval turbines', u'possible .Wind turbines', u'type .Very high efficiency steam turbines', u'conventional steam turbines', u'draft tube .Francis turbines']
    # >> ********
    # >> keywords: fluid
    # >> [u'a fluid', u'working fluid', u'a high velocity fluid', u'fluid', u'calculations further .Computational fluid']
    # >> ********
    # >> keywords: impulse
    # >> [u'equivalent impulse', u'impulse', u'Pressure compound multistage impulse', u'de Laval type impulse', u'traditionally more impulse']
    # >> ********

</pre>
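The tag pattern in `get_phrases_contain_keyword()` is compact, so here is a rough, dependency-free sketch of the idea it expresses: find the keyword, extend left over adjective/noun tags and right over noun tags, then de-duplicate and cap the results. The input is hand-tagged with Penn Treebank tags (in the script, `parsetree()` supplies them), only a single keyword is supported, and `phrases_with_keyword()` is a hypothetical helper. Pattern's `search()` applies its own matching rules, so its results will differ.

```python
# Adjectives and nouns may precede the keyword; only nouns may follow it.
MODIFIER_TAGS = {"JJ", "NN", "NNS", "NNP"}
NOUN_TAGS = {"NN", "NNS", "NNP"}


def phrases_with_keyword(tagged, keyword, limit=5):
    """Return de-duplicated phrases around `keyword` (hypothetical helper).

    `tagged` is a list of (word, tag) pairs; limit=0 returns every phrase.
    """
    found = []
    for i, (word, _) in enumerate(tagged):
        if word.lower() != keyword:
            continue
        start = i
        while start > 0 and tagged[start - 1][1] in MODIFIER_TAGS:
            start -= 1                   # extend left over modifiers
        end = i + 1
        while end < len(tagged) and tagged[end][1] in NOUN_TAGS:
            end += 1                     # extend right over nouns
        phrase = " ".join(w for w, _ in tagged[start:end])
        if phrase not in found:          # order-preserving de-duplication
            found.append(phrase)
    return found[:limit] if limit > 0 else found


tagged = [("The", "DT"), ("marine", "JJ"), ("turbine", "NN"), ("drives", "VBZ"),
          ("a", "DT"), ("generator", "NN"), (".", "."),
          ("Steam", "NN"), ("turbine", "NN"), ("blades", "NNS"), ("spin", "VBP"),
          (".", ".")]
print(phrases_with_keyword(tagged, "turbine"))  # ['marine turbine', 'Steam turbine blades']
```

Because each sentence ends with a `.` token tagged `.`, the left and right extensions stop at sentence boundaries, which avoids fragments that run across two sentences.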

<pre>